Executive Summary

Oracle SOA Suite provides a generic fault management framework for handling faults in BPEL processes. If a fault occurs during runtime in an invoke activity in a process, the framework catches the fault and performs a user-specified action defined in a fault policy file associated with the activity. If a fault results in a condition in which human intervention is the prescribed action, you perform recovery actions from Oracle Enterprise Manager Fusion Middleware Control. The fault management framework provides an alternative to designing a BPEL process with catch activities in scope activities.

Fault Handling in SOA Suite

There are two categories of fault in SOA Suite:

1. Runtime faults

Runtime faults are not user-defined; the system throws them. They result from problems such as logic errors in programming (for example, an endless loop), a Simple Object Access Protocol (SOAP) fault returned by a SOAP call, or an exception thrown by the server.

2. Business faults

Business faults are application-specific faults that are generated when there is a problem with the information being processed (for example, when a social security number is not found in the database).
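To make the distinction concrete, the sketch below classifies a fault by its qualified name. It is a simplification under stated assumptions: `FaultClassifier` is a hypothetical helper, not a SOA Suite API. It treats only the bpelx system faults used in this article's policy files, plus Mediator faults, as runtime faults, and assumes every other fault is an application-defined business fault declared in a service WSDL. SOA Suite has more system faults than these (for example, the standard BPEL faults), so this is not a complete list.

```java
import java.util.Set;

// Hypothetical helper, not a SOA Suite API. Simplifying assumption: the system
// faults named in this article's policy files (plus Mediator faults) are runtime
// faults; any other fault is treated as an application-defined business fault.
final class FaultClassifier {
    enum Category { RUNTIME, BUSINESS }

    static final String BPELX_NS = "http://schemas.oracle.com/bpel/extension";
    static final String MEDIATOR_NS = "http://schemas.oracle.com/mediator/faults";
    // BPEL extension system faults that appear in the policy files in this article.
    static final Set<String> BPELX_RUNTIME = Set.of("remoteFault", "bindingFault", "runtimeFault");

    static Category classify(String namespaceUri, String localName) {
        boolean bpelxSystemFault = BPELX_NS.equals(namespaceUri) && BPELX_RUNTIME.contains(localName);
        boolean mediatorFault = MEDIATOR_NS.equals(namespaceUri);
        return (bpelxSystemFault || mediatorFault) ? Category.RUNTIME : Category.BUSINESS;
    }

    public static void main(String[] args) {
        System.out.println(classify(BPELX_NS, "remoteFault"));
        // Hypothetical business fault for the "social security number not found" example.
        System.out.println(classify("http://example.com/ssn", "ssnNotFound"));
    }
}
```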

The fault management framework uses two files to handle faults in SOA Suite: a fault policy file (fault-policies.xml) and a fault policy bindings file (fault-bindings.xml). The policy file contains Conditions and Actions sections that define which faults to catch and what to do when each one occurs; the bindings file associates a policy with the whole composite or with individual components or references. Both files can be stored either in the project directory or in the Metadata Services (MDS) repository. The example that follows shows how the two files work together.

Need for Fault Handling in SOA Suite

When you design a business process, you expect it to run smoothly, and ideally it does. But faults still occur in situations you did not plan for, such as a sudden machine shutdown or network problems. Good design defines in advance how to handle these situations; otherwise they can make the process fail outright. We cannot predict every problem that may occur, but we can define specific remedies for common error conditions and a general policy for the unpredictable rest. Think of an online transaction that suddenly fails with a page saying that you cannot connect. You would rather see a message saying that the server is under maintenance than a raw error page. This is why fault handling is needed: to deal gracefully with situations that are not ideal.

Problem definition

First, we create a scenario that shows what happens when a fault is not handled. Then we handle the same fault using a fault policy.

We create a simple composite in which a subprocess intentionally throws a fault using a Throw activity. The subprocess is called by the main process. We then see how the fault is handled once the fault handling framework is configured to handle that error.

We define the two policy files for our requirement and design them for one specific fault (bpelx:remoteFault).

High-level solution

We define two files, fault-policies.xml and fault-bindings.xml. Together they specify the conditions under which a fault is handled and the action to take when that fault occurs.

A typical fault policy file has the following format:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<faultPolicies xmlns="http://schemas.oracle.com/bpel/faultpolicy"
               xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <faultPolicy version="0.0.1" id="FusionMidFaults"
               xmlns:env="http://schemas.xmlsoap.org/soap/envelope/"
               xmlns:xs="http://www.w3.org/2001/XMLSchema"
               xmlns="http://schemas.oracle.com/bpel/faultpolicy"
               xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
    <Conditions>
      <faultName xmlns:medns="http://schemas.oracle.com/mediator/faults"
                 name="medns:mediatorFault">
        <condition>
          <action ref="MediatorJavaAction"/>
        </condition>
      </faultName>
      <faultName xmlns:bpelx="http://schemas.oracle.com/bpel/extension"
                 name="bpelx:remoteFault">
        <condition>
          <action ref="BPELJavaAction"/>
        </condition>
      </faultName>
      <faultName xmlns:bpelx="http://schemas.oracle.com/bpel/extension"
                 name="bpelx:bindingFault">
        <condition>
          <action ref="BPELJavaAction"/>
        </condition>
      </faultName>
      <faultName xmlns:bpelx="http://schemas.oracle.com/bpel/extension"
                 name="bpelx:runtimeFault">
        <condition>
          <action ref="BPELJavaAction"/>
        </condition>
      </faultName>
    </Conditions>
    <Actions>
      <!-- Generics -->
      <Action id="default-terminate">
        <abort/>
      </Action>
      <Action id="default-replay-scope">
        <replayScope/>
      </Action>
      <Action id="default-rethrow-fault">
        <rethrowFault/>
      </Action>
      <Action id="default-human-intervention">
        <humanIntervention/>
      </Action>
      <Action id="MediatorJavaAction">
        <!-- user-provided Java class -->
        <javaAction className="MediatorJavaAction.myClass"
                    defaultAction="default-terminate">
          <returnValue value="MANUAL" ref="default-human-intervention"/>
        </javaAction>
      </Action>
      <Action id="BPELJavaAction">
        <!-- user-provided Java class -->
        <javaAction className="BPELJavaAction.myAnotherClass"
                    defaultAction="default-terminate">
          <returnValue value="MANUAL" ref="default-human-intervention"/>
        </javaAction>
      </Action>
    </Actions>
  </faultPolicy>
</faultPolicies>
```
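To see how the Conditions section ties each fault to an entry in the Actions section, the following Java sketch reads a policy file with the JDK's built-in DOM parser. It is a hypothetical helper, not part of SOA Suite: `conditionActions` maps each `faultName` to the action referenced by its first condition, and `unresolvedRefs` lists action IDs that are referenced (through `action ref`, `returnValue ref`, or `defaultAction`) but never defined with `<Action id>`. The `SAMPLE` string is a trimmed-down copy of the file above.

```java
import java.io.StringReader;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical helper (not part of SOA Suite) that inspects a fault policy file.
final class FaultPolicyReader {
    static final String FP_NS = "http://schemas.oracle.com/bpel/faultpolicy";

    // Trimmed-down version of the typical policy file.
    static final String SAMPLE = String.join("\n",
        "<faultPolicies xmlns='" + FP_NS + "'>",
        " <faultPolicy version='0.0.1' id='FusionMidFaults'>",
        "  <Conditions>",
        "   <faultName xmlns:bpelx='http://schemas.oracle.com/bpel/extension' name='bpelx:remoteFault'>",
        "    <condition><action ref='BPELJavaAction'/></condition>",
        "   </faultName>",
        "  </Conditions>",
        "  <Actions>",
        "   <Action id='default-terminate'><abort/></Action>",
        "   <Action id='default-human-intervention'><humanIntervention/></Action>",
        "   <Action id='BPELJavaAction'>",
        "    <javaAction className='BPELJavaAction.myAnotherClass' defaultAction='default-terminate'>",
        "     <returnValue value='MANUAL' ref='default-human-intervention'/>",
        "    </javaAction>",
        "   </Action>",
        "  </Actions>",
        " </faultPolicy>",
        "</faultPolicies>");

    static Document parse(String xml) {
        try {
            DocumentBuilderFactory f = DocumentBuilderFactory.newInstance();
            f.setNamespaceAware(true); // needed to resolve prefixes such as bpelx:
            return f.newDocumentBuilder().parse(new InputSource(new StringReader(xml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Not well-formed XML", e);
        }
    }

    /** Maps each faultName, as "{namespace}localName", to the action ref of its first condition. */
    static Map<String, String> conditionActions(Document doc) {
        Map<String, String> result = new LinkedHashMap<>();
        NodeList faults = doc.getElementsByTagNameNS(FP_NS, "faultName");
        for (int i = 0; i < faults.getLength(); i++) {
            Element fault = (Element) faults.item(i);
            String qname = fault.getAttribute("name");
            int colon = qname.indexOf(':');
            String prefix = colon < 0 ? null : qname.substring(0, colon);
            String namespace = fault.lookupNamespaceURI(prefix); // prefix is declared on faultName
            NodeList actions = fault.getElementsByTagNameNS(FP_NS, "action");
            if (actions.getLength() > 0) {
                String ref = ((Element) actions.item(0)).getAttribute("ref");
                result.put("{" + namespace + "}" + qname.substring(colon + 1), ref);
            }
        }
        return result;
    }

    /** Action IDs that are referenced somewhere but never defined as <Action id="...">. */
    static Set<String> unresolvedRefs(Document doc) {
        Set<String> defined = new HashSet<>();
        NodeList actions = doc.getElementsByTagNameNS(FP_NS, "Action");
        for (int i = 0; i < actions.getLength(); i++) {
            defined.add(((Element) actions.item(i)).getAttribute("id"));
        }
        Set<String> missing = new TreeSet<>();
        collectMissing(doc, "action", "ref", defined, missing);
        collectMissing(doc, "returnValue", "ref", defined, missing);
        collectMissing(doc, "javaAction", "defaultAction", defined, missing);
        return missing;
    }

    private static void collectMissing(Document doc, String tag, String attr,
                                       Set<String> defined, Set<String> missing) {
        NodeList nodes = doc.getElementsByTagNameNS(FP_NS, tag);
        for (int i = 0; i < nodes.getLength(); i++) {
            String ref = ((Element) nodes.item(i)).getAttribute(attr);
            if (!ref.isEmpty() && !defined.contains(ref)) missing.add(ref);
        }
    }

    public static void main(String[] args) {
        Document doc = parse(SAMPLE);
        System.out.println(conditionActions(doc));
        System.out.println(unresolvedRefs(doc));
    }
}
```

Running `main` prints the single mapping from remoteFault to BPELJavaAction and an empty set of unresolved references; renaming one of the `<Action id>` values makes the dangling reference show up.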

Similarly, a typical fault-bindings.xml file has the following format:

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<faultPolicyBindings version="0.0.1"
                     xmlns="http://schemas.oracle.com/bpel/faultpolicy"
                     xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <composite faultPolicy="FusionMidFaults"/>
  <!--<composite faultPolicy="ServiceExceptionFaults"/>-->
  <!--<composite faultPolicy="GenericSystemFaults"/>-->
</faultPolicyBindings>
```

Solution details

The following sections describe the setup required to recreate the problem scenario, followed by the solution steps.

Setup

SOA Suite 11.1.1.5

Oracle XE 11.2

RCU 11.1.1.5

Oracle WebLogic Server 10.3

JDeveloper 11.1.1.5

Business flow

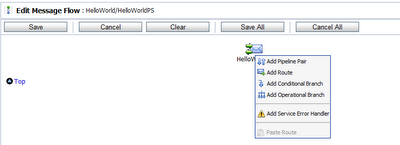

Steps to recreate the issue

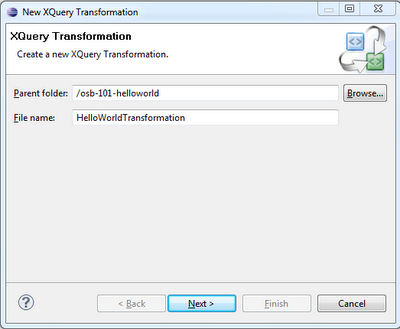

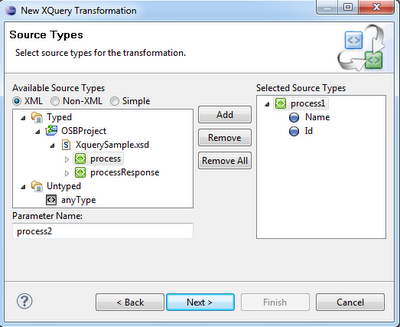

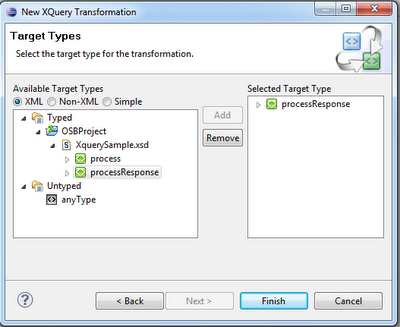

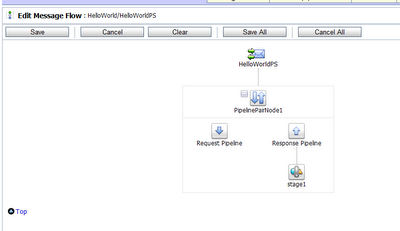

1. In JDeveloper, create a synchronous BPEL process.

Give it a logical name.

Then drag a second BPEL process onto the composite editor, as shown.

Name this process SubProcess and make it synchronous as well.

Wire the two processes together.

2. Open SubProcess and drag a Throw activity between the Receive and Reply activities. Double-click the Throw activity and select Remote Fault from the list of system faults, as shown.

Also create a fault variable. When you finish the wizard, a WSDL file called RuntimeFault.wsdl is created with the following content:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<definitions name="RuntimeFault"
             targetNamespace="http://schemas.oracle.com/bpel/extension"
             xmlns:xsd="http://www.w3.org/2001/XMLSchema"
             xmlns="http://schemas.xmlsoap.org/wsdl/">
  <message name="RuntimeFaultMessage">
    <part name="code" type="xsd:string"/>
    <part name="summary" type="xsd:string"/>
    <part name="detail" type="xsd:string"/>
  </message>
</definitions>
```

Next, drag an Assign activity between the Receive and Throw activities and assign sample values to the parts of the fault variable (code, summary, and detail).

Your SubProcess should now look something like this.

3. Now go to MainProcess.

Drag an Invoke activity onto the process and use it to call SubProcess. Create the corresponding input and output variables for the invocation.

Then drag an Assign activity after the Invoke. It copies the output of SubProcess to the replyOutput of MainProcess. This step is intentional: it passes the fault on to the client of MainProcess.

The overall MainProcess will look something like this.

Our fault process is now ready. Deploy it and test it to see how it behaves.

When tested, the process errors out because of the fault.

As you can see, the instance is faulted, and there is no way for a person to intervene and correct the fault.

Recovery

To recover from this situation, we create a fault policy that handles the fault manually through human intervention.

My fault-bindings.xml looks like this:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<faultPolicyBindings version="2.0.1"
                     xmlns="http://schemas.oracle.com/bpel/faultpolicy"
                     xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <component faultPolicy="bpelFaultHandling">
    <name>CatchFault</name>
  </component>
</faultPolicyBindings>
```

And my fault-policies.xml looks like this:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<faultPolicies xmlns="http://schemas.oracle.com/bpel/faultpolicy">
  <faultPolicy version="2.0.1" id="bpelFaultHandling"
               xmlns:env="http://schemas.xmlsoap.org/soap/envelope/"
               xmlns:xs="http://www.w3.org/2001/XMLSchema"
               xmlns="http://schemas.oracle.com/bpel/faultpolicy"
               xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
    <Conditions>
      <faultName xmlns:bpelx="http://schemas.oracle.com/bpel/extension"
                 name="bpelx:remoteFault">
        <condition>
          <action ref="ora-human-intervention"/>
        </condition>
      </faultName>
    </Conditions>
    <Actions>
      <!-- This action marks the work item as "pending recovery from console" -->
      <Action id="ora-human-intervention">
        <humanIntervention/>
      </Action>
    </Actions>
  </faultPolicy>
</faultPolicies>
```

There are two important things to note:

1. fault-bindings.xml contains the entry `<component faultPolicy="bpelFaultHandling">`. The faultPolicy attribute is a reference ID that points to a policy in fault-policies.xml, where the corresponding entry is `<faultPolicy version="2.0.1" id="bpelFaultHandling">`. This reference ID must match in the two files; in my case it is bpelFaultHandling in both.

2. fault-bindings.xml also contains the entry `<name>CatchFault</name>`. This is the name of the BPEL process that catches the fault, that is, the component the policy is bound to. In my case, that process is named CatchFault.
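Both rules are easy to break with a typo, so it can help to check them before deploying. The following Java sketch is a hypothetical pre-deployment check, not an Oracle tool. It assumes the three files are available as strings and that composite.xml declares each BPEL process as a `<component name="...">` element; the composite name `FaultDemo` and the component `SubProcess` in the sample are illustrative only.

```java
import java.io.StringReader;
import java.util.Set;
import java.util.TreeSet;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical check for the two rules:
//  1. every faultPolicy attribute in fault-bindings.xml names a <faultPolicy id> in fault-policies.xml;
//  2. every <component><name> in fault-bindings.xml names a <component> in composite.xml.
final class BindingCheck {
    static final String FP_NS = "http://schemas.oracle.com/bpel/faultpolicy";

    static Document parse(String xml) {
        try {
            DocumentBuilderFactory f = DocumentBuilderFactory.newInstance();
            f.setNamespaceAware(true);
            return f.newDocumentBuilder().parse(new InputSource(new StringReader(xml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Not well-formed XML", e);
        }
    }

    // Collects an attribute from every element matching the namespace and local name.
    static Set<String> attributes(Document doc, String ns, String tag, String attr) {
        Set<String> values = new TreeSet<>();
        NodeList nodes = doc.getElementsByTagNameNS(ns, tag);
        for (int i = 0; i < nodes.getLength(); i++) {
            Element e = (Element) nodes.item(i);
            if (e.hasAttribute(attr)) values.add(e.getAttribute(attr));
        }
        return values;
    }

    /** Describes each broken reference; an empty set means the files agree. */
    static Set<String> problems(String bindingsXml, String policiesXml, String compositeXml) {
        Document bindings = parse(bindingsXml);
        Set<String> policyIds = attributes(parse(policiesXml), FP_NS, "faultPolicy", "id");
        // "*" as the namespace: match <component> whatever namespace composite.xml uses.
        Set<String> componentNames = attributes(parse(compositeXml), "*", "component", "name");
        Set<String> problems = new TreeSet<>();
        // "*" as the local name: a binding can sit on <composite>, <component>, <reference>, ...
        for (String ref : attributes(bindings, FP_NS, "*", "faultPolicy")) {
            if (!policyIds.contains(ref)) problems.add("no faultPolicy with id " + ref);
        }
        NodeList names = bindings.getElementsByTagNameNS(FP_NS, "name");
        for (int i = 0; i < names.getLength(); i++) {
            Element name = (Element) names.item(i);
            boolean underComponent = "component".equals(name.getParentNode().getLocalName());
            String component = name.getTextContent().trim();
            if (underComponent && !componentNames.contains(component)) {
                problems.add("no component named " + component);
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        String bindings = "<faultPolicyBindings version='2.0.1' xmlns='" + FP_NS + "'>"
            + "<component faultPolicy='bpelFaultHandling'><name>CatchFault</name></component>"
            + "</faultPolicyBindings>";
        String policies = "<faultPolicies xmlns='" + FP_NS + "'>"
            + "<faultPolicy version='2.0.1' id='bpelFaultHandling'/></faultPolicies>";
        // Hypothetical composite.xml; the composite name and SubProcess are illustrative.
        String composite = "<composite name='FaultDemo' xmlns='http://xmlns.oracle.com/sca/1.0'>"
            + "<component name='CatchFault'/><component name='SubProcess'/></composite>";
        System.out.println(problems(bindings, policies, composite)); // prints [] when everything matches
    }
}
```

Changing either `bpelFaultHandling` or `CatchFault` in only one file makes the corresponding problem appear in the result.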

Next, add two properties inside the `<composite>` element of composite.xml so that the composite knows the fault policy files exist:

```xml
<property name="oracle.composite.faultPolicyFile">fault-policies.xml</property>
<property name="oracle.composite.faultBindingFile">fault-bindings.xml</property>
```

In this demo, I use human intervention as the fault-handling action.

Store fault-bindings.xml and fault-policies.xml in the same directory as composite.xml. The file structure will look like this.

And composite.xml will have entries like this.
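Because a misplaced file or a mistyped property value is easy to miss, here is a minimal sketch of a local check, assuming composite.xml declares the two `oracle.composite.*` properties described earlier. `PolicyFileLocationCheck` is hypothetical, not an Oracle tool: it resolves each property value relative to composite.xml and reports files that do not exist, skipping values that start with `oramds:`, which point into the MDS repository rather than the local file system. `demoComposite` only builds a throwaway project directory for the example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical pre-deployment check (not an Oracle tool).
final class PolicyFileLocationCheck {
    static final List<String> PROPERTIES =
        List.of("oracle.composite.faultPolicyFile", "oracle.composite.faultBindingFile");

    /** Lists problems with the fault policy properties in composite.xml; empty means all is well. */
    static List<String> check(Path compositeXml) {
        NodeList props;
        try {
            DocumentBuilderFactory f = DocumentBuilderFactory.newInstance();
            f.setNamespaceAware(true);
            props = f.newDocumentBuilder().parse(compositeXml.toFile())
                     .getElementsByTagNameNS("*", "property");
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot read " + compositeXml, e);
        }
        List<String> problems = new ArrayList<>();
        for (String wanted : PROPERTIES) {
            String value = null;
            for (int i = 0; i < props.getLength(); i++) {
                Element p = (Element) props.item(i);
                if (wanted.equals(p.getAttribute("name"))) value = p.getTextContent().trim();
            }
            if (value == null) {
                problems.add("missing property " + wanted);
            // oramds: values live in the MDS repository, so only local paths are checked.
            } else if (!value.startsWith("oramds:")
                       && !Files.isRegularFile(compositeXml.resolveSibling(value))) {
                problems.add(value + " not found next to composite.xml");
            }
        }
        return problems;
    }

    /** Demo helper: writes composite.xml and fault-policies.xml, plus fault-bindings.xml if asked. */
    static Path demoComposite(boolean withBindingsFile) {
        try {
            Path dir = Files.createTempDirectory("fault-demo");
            Path composite = dir.resolve("composite.xml");
            Files.writeString(composite,
                "<composite name='FaultDemo' xmlns='http://xmlns.oracle.com/sca/1.0'>"
                + "<property name='oracle.composite.faultPolicyFile'>fault-policies.xml</property>"
                + "<property name='oracle.composite.faultBindingFile'>fault-bindings.xml</property>"
                + "</composite>");
            Files.writeString(dir.resolve("fault-policies.xml"), "<faultPolicies/>");
            if (withBindingsFile) {
                Files.writeString(dir.resolve("fault-bindings.xml"), "<faultPolicyBindings/>");
            }
            return composite;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // fault-bindings.xml is deliberately left out, so one problem is reported.
        System.out.println(check(demoComposite(false)));
    }
}
```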

Now redeploy the process and test the scenario again.

Results

With the fault policies in place, testing the process produces the following output when the fault occurs.

This time a recovery option appears in the result. Click the recovery activity to open the following page.

Here you have multiple options: you can replay, rethrow, abort, or continue with different input, depending on your business requirements. This lets a person decide on the appropriate action when a fault occurs.

Further Scope

Here we applied the fault handling framework to human intervention. The same approach works with other widely used practices, such as handling errors with custom Java code or with rejection handlers.
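For the custom Java option, the typical policy file at the start of this article shows the shape: a `<javaAction>` names a class, lists `<returnValue>` mappings, and falls back to `defaultAction`. The sketch below models only that routing, under clear assumptions: `FaultHandler` is a hypothetical stand-in for the real handler class (in SOA Suite 11g, a class implementing Oracle's `IFaultRecoveryJavaClass` interface, whose `handleFault` method receives a recovery context rather than a fault name; check the Oracle documentation for the exact signature), and the handler logic is invented for illustration.

```java
import java.util.Map;

// Hypothetical model of how a <javaAction> picks the next action; not Oracle code.
final class JavaActionRouting {
    /** Stand-in for a custom handler class; the real one receives a recovery context. */
    interface FaultHandler {
        String handleFault(String faultName);
    }

    /**
     * Models <javaAction defaultAction="..."> with <returnValue value="..." ref="..."/> children:
     * a returned string that matches a returnValue selects its ref; anything else uses defaultAction.
     */
    static String nextAction(FaultHandler handler, String faultName,
                             Map<String, String> returnValueRefs, String defaultAction) {
        String returned = handler.handleFault(faultName);
        if (returned == null) return defaultAction; // Map.of maps reject null lookups
        return returnValueRefs.getOrDefault(returned, defaultAction);
    }

    public static void main(String[] args) {
        // Hypothetical handler: ask for manual recovery on remote faults only.
        FaultHandler handler = fault -> "bpelx:remoteFault".equals(fault) ? "MANUAL" : "GIVE_UP";
        Map<String, String> refs = Map.of("MANUAL", "default-human-intervention");
        System.out.println(nextAction(handler, "bpelx:remoteFault", refs, "default-terminate"));
        System.out.println(nextAction(handler, "bpelx:bindingFault", refs, "default-terminate"));
    }
}
```

With this hypothetical handler, a remote fault is routed to `default-human-intervention`, while a binding fault falls back to `default-terminate`.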

Business benefits

Your business processes will be prepared for unexpected errors, so end users are not confronted with a raw failure when a process fails. If you store the fault policies in the MDS repository, the same policies can be applied to multiple processes.

Conclusion

When defined properly, fault handling lets a business process deal with the errors that may occur in it: specific policies for the faults you can anticipate, and a general policy for the rest.

Reproduce the issue on a local machine

1. Download the file and unzip it on your local machine.

2. Open JDeveloper and import the .jpr file.

3. Deploy and test the project.