WebLogic Server prioritizes work using an execution model that takes into account user/admin-defined parameters and the actual performance of the server. It lets developers configure Work Managers to prioritize pending work and improve the performance of a process. In this exercise we will try to understand how to configure Work Managers in SOA/OSB 12c to improve the performance of services.

We will look at the OSB configuration first, as it is straightforward, and then at the SOA configuration.

A Work Manager can be configured in OSB for proxy services as well as business services.

A Work Manager configured on a proxy service limits the number of threads running that proxy service, and a Work Manager configured on a business service limits the number of threads that process responses from the back-end system. It is important to understand that Work Managers are used to prioritize work, unlike throttling, which is used to restrict the number of records. So there is a chance that you might lose some data when you have enabled throttling, but with a Work Manager you will not lose data; only the prioritization of the service will change.

As per the Oracle documentation:

https://docs.oracle.com/middleware/1213/osb/develop/GUID-7A9661AE-6FE5-4A92-A418-694A84D0B0BF.htm#OSBDV89434

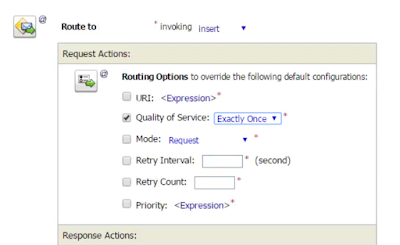

The Work Manager (dispatch policy) configuration for a business service should depend on how the business service is invoked. If a proxy service invokes the business service using a service callout, a publish action, or routing with exactly-once QoS (as described in Pipeline Actions), consider using different Work Managers for the proxy service and the business service instead of using the same for both. For the business service Work Manager, configure the Min Thread Constraint property to a small number (1-3) to guarantee an available thread.

Now, without going much further into the theory, we will see how to configure a Work Manager in OSB 12c. The concept is similar to that of 11g; however, the screens have changed for OSB applications.

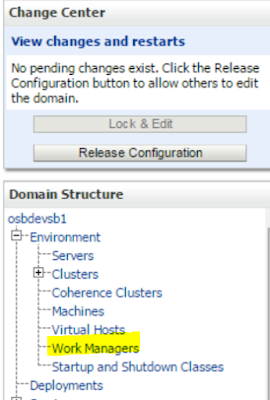

First, log in to the admin console.

http://host:port/console

Go to Environment-->Work Managers

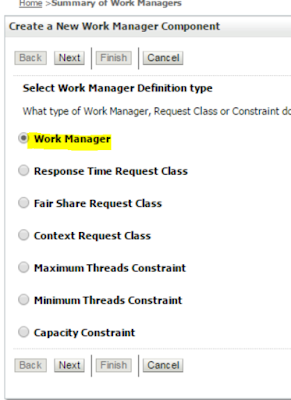

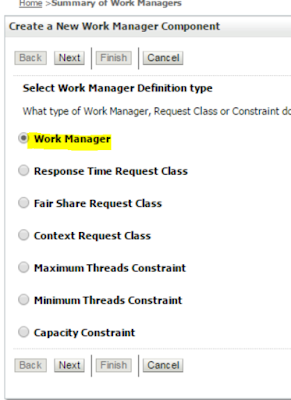

Click New and create a minimum threads constraint.

A minimum threads constraint ensures that, whatever the load on the server, this minimum number of threads will be allocated to the service.

Give it a logical name and assign the minimum number of threads. By default the value is -1, which means infinite.

Click Next and target it to the server.

Next, go to Work Managers again and this time create a Work Manager.

Give it a logical name.

Then target it to the server and finish the wizard.

You can now open your Work Manager and select the constraint you defined earlier.

Save the changes.
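The console steps above can also be scripted. The following is a minimal sketch rather than a tested procedure: it assumes `wlst` is an object exposing the WLST online commands (`edit`, `startEdit`, `cd`, `create`, `getMBean`, `save`, `activate`) in an already connected session, and the function name `create_work_manager` and all of its arguments are hypothetical names chosen for illustration.

```python
def create_work_manager(wlst, domain, server, constraint, work_manager, min_threads):
    """Create a min threads constraint and a Work Manager that uses it.

    Assumption: `wlst` exposes the WLST online commands and is already
    connected to the admin server. All names are supplied by the caller.
    """
    tuning = '/SelfTuning/' + domain

    # Open an edit session, as the console does when you lock and edit.
    wlst.edit()
    wlst.startEdit()

    # Look up the server that both objects will be targeted to.
    target = wlst.getMBean('/Servers/' + server)

    # Step 1: the constraint, with its thread count and target server.
    wlst.cd(tuning)
    mtc = wlst.create(constraint, 'MinThreadsConstraint')
    mtc.setCount(min_threads)
    mtc.addTarget(target)

    # Step 2: the Work Manager, targeted to the same server.
    wlst.cd(tuning)
    wm = wlst.create(work_manager, 'WorkManager')
    wm.addTarget(target)

    # Step 3: attach the constraint to the Work Manager.
    wm.setMinThreadsConstraint(mtc)

    # Persist and activate the changes.
    wlst.save()
    wlst.activate()
    return wm
```

A call might look like `create_work_manager(wlst, 'base_domain', 'osb_server1', 'OSBMinThreads', 'OSBWorkManager', 3)`, where every value is a placeholder for your own domain, server, and object names. Passing the WLST commands in as an object keeps the function usable both from a WLST-generated module and from a stub when trying the logic offline.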

Once the Work Manager is created, you just need to attach it to the business service in OSB.

Open the OSB console.

http://host:port/sbconsole

Go to your business service, open Transport Details, and select the Work Manager that you created in the previous steps.

Save the changes and activate your session.

For configuring a Work Manager in SOA we do not have many options.

SOA services use a default Work Manager called wm/SOAWorkManager.

You can configure your own constraints and update the Work Manager to use those constraints.